Advances in machine learning and UX design are converging to create vehicle interfaces that learn your habits, anticipate your needs, and adapt displays, controls, and alerts in real time. You stand to benefit from reduced distraction and more personalized assistance as sensors, context-aware models, and continuous feedback optimize interactions. Whether adaptation enhances safety and trust rather than undermining them will depend on robust evaluation, ethical safeguards, and human-centered constraints.

Key Takeaways:

- Machine learning can enable truly adaptive vehicle interfaces by delivering context-aware personalization of displays, controls, and alerts based on driver behavior, environment, and vehicle state.

- Safety and driver trust hinge on predictability and explainability: models must fail safely, be interpretable, and be validated to prevent distraction or inappropriate automation.

- Real-world deployment requires data-driven design workflows, on‑vehicle compute and latency considerations, and clear handling of privacy, ethics, and regulatory constraints.

The Basics of Machine Learning

In practice, ML lets systems infer patterns from labeled and unlabeled data using models such as decision trees, SVMs, and deep neural networks; you manage training, validation, and test splits while tracking metrics like accuracy, precision, recall, and AUC. Regularization, cross-validation, and hyperparameter tuning reduce overfitting, and production pipelines often retrain weekly or continuously as new telemetry and usage data arrive.
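As a minimal sketch of the split-and-measure routine described above, the Python below shuffles a dataset into training, validation, and test sets and computes precision and recall for binary predictions. The function names and the 70/15/15 ratio are illustrative assumptions, not a prescribed pipeline.

```python
import random

def split_dataset(rows, train=0.7, val=0.15, seed=0):
    """Shuffle rows and cut them into train / validation / test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Keeping the test split untouched until the end is what lets the reported metrics stand in for performance on unseen telemetry.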

Definition and Key Concepts

Supervised, unsupervised, and reinforcement learning address different problems: you supply labels for classification, allow clustering to surface structure, or reward agents for sequential decisions. Models minimize loss via gradient descent over epochs, and you tune learning rate, batch size, and architecture. Feature engineering, embeddings, and transfer learning frequently outweigh marginal model changes when you have limited labeled data.
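To make "minimize loss via gradient descent over epochs" concrete, here is a small sketch that fits a straight line to a handful of points with full-batch gradient descent on mean squared error. The function name, learning rate, and epoch count are assumptions chosen for this toy problem, and a real model would use a framework rather than hand-written gradients.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient; the learning rate sets the step size.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Points drawn from y = 2x + 1, so the fit should approach w = 2, b = 1.
w, b = fit_line([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
```

Raising the learning rate too far makes the updates overshoot and diverge, which is why it is one of the first hyperparameters you tune.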

Current Applications in Technology

Recommendation engines, voice assistants, and computer vision dominate consumer ML: Netflix serves over 200 million subscribers with collaborative-filtering variants, while Alexa and Siri parse language using transformer models; camera-based driver monitoring detects distraction or drowsiness with lab sensitivities often above 90%. In vehicles you already see personalization in seat presets, HVAC scheduling, predictive routing, and adaptive infotainment menus.

Beyond those examples, ML powers ADAS and autonomy through sensor fusion (combining lidar, radar, and cameras), with convolutional and transformer networks predicting object motion seconds into the future. You’ll find platforms from NVIDIA and Mobileye integrating models and OTA updates; fleets commonly retrain on billions of frames and deploy inference at 30-60 Hz to meet real-time control and safety requirements.

Understanding User Experience (UX)

You evaluate UX by how effectively your interface supports tasks under real driving conditions: cognitive load, glance behavior, ergonomics, and personalization. OEMs track task completion time, error rate, and glance duration (ISO 15007), often targeting sub-2-second glances; HUDs, voice assistants, and steering-wheel controls each shift attention demand differently. Telemetry from touch events, voice queries, and steering inputs lets you quantify trade-offs and iterate UX against safety and satisfaction KPIs.
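One way to turn gaze telemetry into the glance-duration KPI mentioned above is sketched below. It assumes a hypothetical log format of `(timestamp_s, on_road)` samples sorted by time, and it uses the 2-second target from the text as the flagging threshold.

```python
def off_road_glances(samples):
    """Return the duration of each continuous off-road interval.

    samples: list of (timestamp_s, on_road) tuples sorted by time.
    An off-road run still open at the end of the log is ignored.
    """
    durations = []
    start = None
    for timestamp, on_road in samples:
        if not on_road and start is None:
            start = timestamp                     # eyes just left the road
        elif on_road and start is not None:
            durations.append(timestamp - start)   # eyes returned to the road
            start = None
    return durations

def long_glances(samples, limit_s=2.0):
    """Glances that exceed the target limit."""
    return [d for d in off_road_glances(samples) if d > limit_s]
```

Counting long glances per task, rather than averaging all glances, keeps a few dangerous outliers from being hidden by many short ones.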

Principles of User-Centric Design

You apply Fitts’ law (pointing time grows with distance and shrinks with target size) and Hick’s law (decision time grows with the number of choices) to prioritize fast, accurate interactions: larger touch targets (7-10 mm), fewer menu layers, and predictable affordances. Rapid in-vehicle prototyping, representative user testing, and A/B experiments expose edge cases; for example, pairing haptic confirmation with voice fallback reduces manual touches during lane changes and low-visibility conditions.
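Both laws reduce to short formulas, sketched here in Python. The code uses the Shannon formulation of Fitts' index of difficulty, log2(D/W + 1), and a common form of Hick's law, log2(n + 1). The empirical coefficients that convert bits into milliseconds are omitted because they must be measured per device and user group; the function names are illustrative.

```python
import math

def fitts_index_of_difficulty(distance_mm, width_mm):
    """Bits of pointing difficulty: grows with distance, shrinks with target width."""
    return math.log2(distance_mm / width_mm + 1)

def hick_bits(n_choices):
    """Bits of decision difficulty for n equally likely choices."""
    return math.log2(n_choices + 1)

# A 10 mm target 70 mm away is easier to hit than a 7 mm target at the same distance.
easy = fitts_index_of_difficulty(70, 10)   # 3.0 bits
hard = fitts_index_of_difficulty(70, 7)    # about 3.46 bits
```

The same comparison explains why trimming a menu from 15 items to 7 lowers decision difficulty from 4 bits to 3.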

Importance of UX in Vehicle Interfaces

You depend on UX to reduce distraction, improve response times, and increase adoption: thousands of distraction-related fatalities annually make lowering glance time and simplifying flows high priorities. Strong UX converts complex ADAS, navigation, and infotainment stacks into low-friction experiences so you can keep attention on driving while still accessing needed functionality.

You see concrete outcomes in deployed systems: GM’s Super Cruise reduces driver workload on long highway drives, Mercedes’ MBUX surfaces habitual actions through learned shortcuts, and Tesla’s driver profiles tailor displays and suggestions. Field evaluations often report 20-50% reductions in secondary-task durations when interfaces adapt to context (speed, road type, time of day), and continuous telemetry enables you to A/B test thresholds (such as disabling certain menus above set speeds) to tune safety versus convenience.
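A speed-based lockout of the kind described above can be as simple as a lookup table. In this sketch the menu names and speed limits are hypothetical placeholders; in practice they would be the thresholds you tune through A/B tests.

```python
# Hypothetical menus and the highest speed (km/h) at which each stays enabled.
MENU_SPEED_LIMITS_KPH = {
    "keyboard_entry": 5,
    "settings": 30,
    "media_browse": 80,
}

def available_menus(speed_kph, limits=MENU_SPEED_LIMITS_KPH):
    """Return the menus that remain enabled at the given speed, sorted by name."""
    return sorted(name for name, limit in limits.items() if speed_kph <= limit)
```

Keeping the thresholds in data rather than code makes it straightforward to ship a different table to each experiment cohort.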

The Intersection of Machine Learning and UX

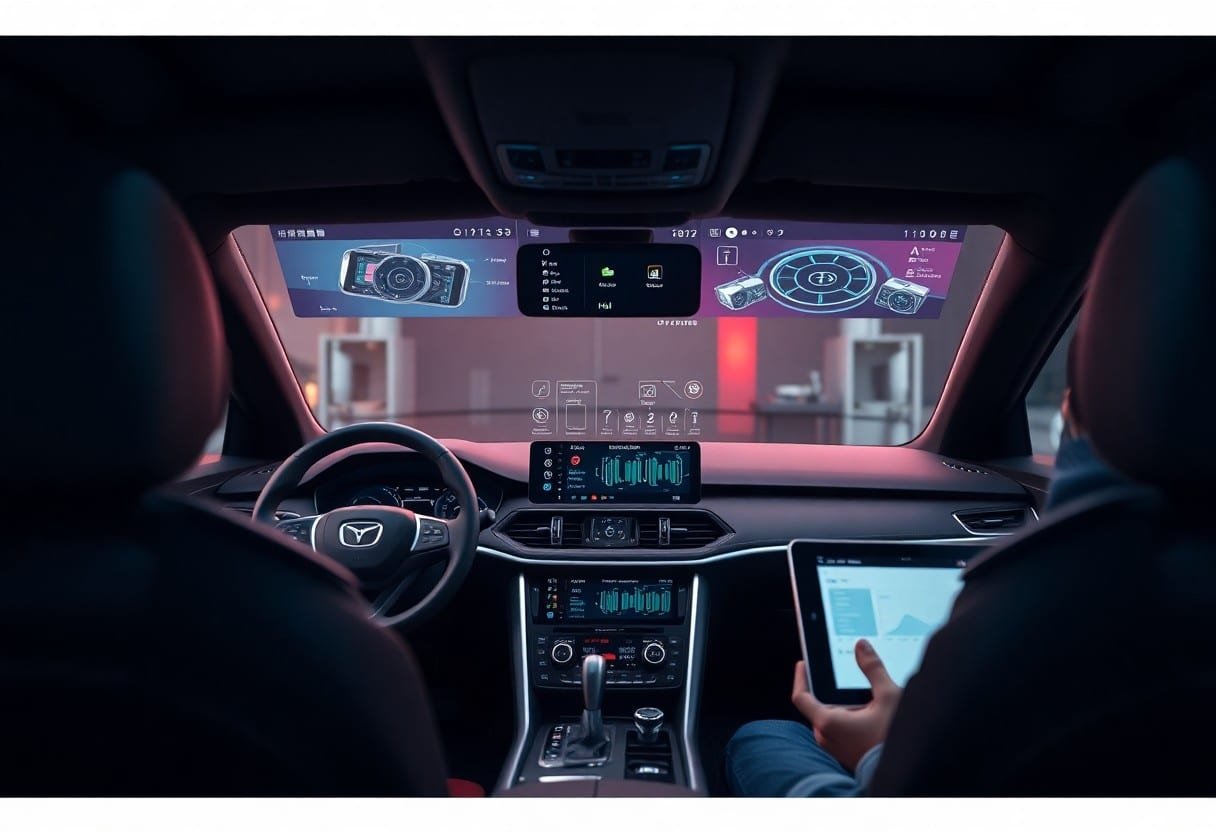

At the point where ML meets UX, sensor fusion and behavior modeling let interfaces adapt to your attention, context, and intent in real time. Models prioritize information based on speed, visibility, and safety constraints, so your HUD, voice assistant, and touch surfaces present only what matters now. This reduces manual interactions, shortens decision loops, and shifts design toward continuous, data-driven refinement while you remain the arbiter of trust and control.
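The idea of presenting "only what matters now" can be sketched as a gate that compares each notification's priority with an estimated driver workload. The workload score, its cut-offs (0.4 and 0.7), and the three priority levels are all assumptions made for illustration; a production system would derive them from a validated driver-state model.

```python
from dataclasses import dataclass

@dataclass
class Notification:
    text: str
    priority: int  # 0 = safety-critical, 1 = important, 2 = informational

def visible_notifications(pending, workload):
    """Filter notifications by driver workload, a score in [0, 1].

    High workload shows only safety-critical items; low workload shows everything.
    """
    if workload >= 0.7:
        max_priority = 0
    elif workload >= 0.4:
        max_priority = 1
    else:
        max_priority = 2
    ordered = sorted(pending, key=lambda n: n.priority)
    return [n for n in ordered if n.priority <= max_priority]
```

Safety-critical items pass the gate at every workload level, which keeps the adaptation from ever hiding a warning.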

How Machine Learning Enhances UX

By learning patterns from telematics, gaze, and environmental sensors, ML personalizes shortcuts, menu hierarchies, and notification timing so you complete tasks faster with fewer glances. Edge models enable sub-100 ms adaptation for critical alerts, and federated learning preserves your privacy while updating shared models. In pilots, adaptive UIs have yielded 20-35% faster task completion and measurable reductions in distraction, improving both convenience and safety for drivers like you.
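The aggregation step at the heart of federated learning can be sketched in a few lines. Each vehicle trains locally and uploads only model weights; the server then averages them, weighted by how many samples each vehicle used (the FedAvg rule). This sketch treats weights as plain lists of floats and leaves out secure aggregation, client sampling, and transport, all of which a real deployment needs.

```python
def federated_average(client_updates):
    """Combine client models into one by sample-weighted averaging.

    client_updates: list of (weights, n_samples), where weights are
    equal-length lists of floats. Raw driving data never reaches this function.
    """
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dim)
    ]

# A vehicle with three times the data pulls the average three times as hard.
merged = federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)])  # [2.5, 3.5]
```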

Case Studies in Adaptive Interfaces

Real deployments show how adaptive HMIs behave under load: production voice assistants tailor phrasing to user vocabulary, HUDs reformat based on gaze, and driver-state models suppress noncrucial notifications during high workload. You can see these patterns in AV handover interfaces, premium OEM adaptive clusters, and over-the-air UX iterations that refine features from fleet telemetry and user feedback.

- Waymo – Fleet telemetry: >20 million autonomous miles; internal tests reported median handover time improved from 5.6s to 3.2s (~43% faster) after adaptive takeover prompts and simplified UI.

- Tesla – OTA UI updates: a recent rollout increased feature adoption to ~80% of active users within two weeks; in-vehicle A/B tests showed a ~30% reduction in average glance duration for navigation tasks after adaptive routing cues.

- Mercedes MBUX – Voice and visual fusion: launched 2018, company pilots recorded ~25% faster voice-initiated climate and media actions and a 40% faster menu selection time using predictive suggestions.

- BMW Personal Profiles – Contextual presets: learning-based seat/climate presets reduced manual adjustments by ~60% and saved an average of 1.8-2.4 minutes per trip per driver in user studies.

- Nissan/ProPILOT-style systems – Takeover readiness: simulator studies with adaptive alerts improved driver response rates by 15-28% and decreased reaction variance across age cohorts.

Digging deeper into these cases shows common patterns: teams combine large-scale telemetry (millions of miles), targeted lab user studies (n=50-500), and controlled simulator trials to validate ML-driven UX changes. You should note the emphasis on measurable metrics – handover time, glance duration, task completion time – and the iterative loop of telemetry → model update → A/B test used to scale safe, useful adaptations.

- Waymo – Dataset details: 20M+ miles, handover event sample n≈1,200; metrics tracked: median handover time, successful takeover rate, false-positive alert rate.

- Tesla OTA study – Cohort: ~150k vehicles in initial rollout; metrics: feature opt-in %, mean glance duration (pre/post), error reports per 10k trips.

- MBUX pilot – Lab n=200, in-vehicle n=1,000; measured: task completion time, voice recognition accuracy (~95% in pilot acoustics), driver satisfaction scores.

- BMW profiles – Field study n=350 drivers over 3 months; outcomes: manual adjustment frequency, average time saved/trip, profile-switch accuracy.

- Nissan takeover simulators – Subjects n=180 across age groups; measured: reaction time mean and SD, success rate of takeover within 3s after alert.
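The percentage figures quoted in these case studies are relative reductions. A small helper (the name is hypothetical) makes the arithmetic explicit, using the handover times from the Waymo entry as the worked example.

```python
def relative_reduction(before, after):
    """Fractional drop from `before` to `after` (0.25 means 25% lower)."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return (before - after) / before

# Median handover time falling from 5.6 s to 3.2 s:
print(f"{relative_reduction(5.6, 3.2) * 100:.1f}%")  # prints 42.9%
```

Reporting the baseline alongside the percentage matters, because the same 43% means very different things for a 5.6-second handover and a 0.5-second one.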

Challenges in Integration

You must balance safety, privacy, and real-world reliability as ML-driven interfaces learn from driver behavior; regulatory demands and deterministic fallback behavior are non-negotiable, while personalization requires clear consent and data governance. Latency budgets, certification pathways, and lifecycle updates add complexity, and recent work such as “Machine Learning for Adaptive Accessible User Interfaces” documents practical trade-offs and evaluation methods you should consider.

Technical Limitations

You encounter tight latency and compute budgets: interactive features often need inference under 100 ms on edge hardware with 1-4 GB RAM or specialized accelerators. Sensor fusion multiplies data rates (cameras, radar, CAN bus), labeled datasets require thousands of driving-hours for edge cases, and model updates must pass OTA validation, fail-safe rollback, and cyber-hardening before deployment.
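A latency budget with a deterministic fallback might look like the sketch below. The function and parameter names are hypothetical, and the check runs after the model call returns, so it detects an overrun rather than preempting it; a hard real-time system would need a watchdog or a separate execution context instead.

```python
import time

def run_with_budget(model_fn, fallback_fn, features, budget_ms=100.0):
    """Run the adaptive model, but use the static fallback if it blows the budget.

    Returns (result, elapsed_ms, used_fallback).
    """
    start = time.perf_counter()
    result = model_fn(features)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    if elapsed_ms > budget_ms:
        # Too slow to be useful for this frame: show the predictable default layout.
        return fallback_fn(features), elapsed_ms, True
    return result, elapsed_ms, False
```

Logging `elapsed_ms` and `used_fallback` per call gives you the tail-latency data needed to decide whether a model fits the target hardware.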

User Acceptance and Adaptation

You will need to earn trust: predictable behavior, clear control toggles and transparent explanations raise adoption. Users reject surprises, so phased rollouts, opt-in personalization and straightforward privacy settings reduce friction, while A/B tests and pilot fleets reveal which adaptations truly improve comfort or reduce distraction.

You can accelerate acceptance by giving users explicit control and visible rationale for changes: show why the UI adapted, let them set aggressiveness levels, and preserve easy manual overrides. Measure success with task completion time, SUS or custom distraction metrics (glance duration, takeover frequency), and iterate from fleet telemetry and qualitative feedback to tune personalization thresholds without increasing cognitive load.
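SUS (the System Usability Scale) has a fixed scoring rule that is easy to get wrong by hand: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a 0-100 score. A short sketch, with an illustrative function name:

```python
def sus_score(responses):
    """Score one participant's ten SUS answers (each 1-5) on the 0-100 scale."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses on a 1-5 scale")
    total = 0
    for item, response in enumerate(responses, start=1):
        if item % 2 == 1:
            total += response - 1   # positively worded items
        else:
            total += 5 - response   # negatively worded items
    return total * 2.5
```

Comparing mean SUS scores before and after an adaptive feature ships gives you a subjective counterpart to the glance and task-time metrics.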

Future Trends in Adaptive Vehicle Interfaces

Expect adaptive interfaces to converge on fleet-level federated learning, richer biometric inputs, and contextual content delivery; you’ll find vendors referencing industrial work such as “Adaptive HMIs: AI-Driven User Interfaces in Industrial and …” as a blueprint for safety-aware personalization that scales across models and regions.

Anticipated Innovations

You’ll see eye-tracking at 60-120 Hz and wearable heart-rate integration paired with multimodal NLU to shift controls proactively, plus reinforcement-learning agents that optimize layouts per driver; Mercedes’ MBUX and Tesla’s profile systems illustrate early personalization, while federated updates will let manufacturers tune UX without centralizing raw driver data.

Potential Impact on the Automotive Industry

You will face shifts in product strategy as OTA-enabled UX becomes a differentiator: Tesla’s OTA-driven feature rollouts (e.g., Autopark added via update) showed how software-first UX can extend vehicle value and service lifecycles, changing revenue models and aftersales ecosystems.

Delving deeper, you’ll need to balance ISO 26262 safety validation, GDPR-style privacy obligations, and cybersecurity hardening as adaptive UIs collect behavioral telemetry; insurers, dealers, and regulators will adapt policies, and OEMs must document ML decision paths to resolve liability and maintain user trust while monetizing personalized services.

Ethical Considerations

Ethical trade-offs become immediate when your vehicle adapts to driver behavior: adaptive voice prompts that prioritize safety could inadvertently reinforce biased routing or surveillance. With regulations like GDPR imposing fines of up to €20 million or 4% of global annual turnover, whichever is higher, you must design for minimal, purpose-limited data collection and transparent consent. See how leading research frames these tensions in “AI-Powered Automotive UX Design Transforms Driving.”

Data Privacy and Security

When you log gaze, biometrics and telematics, encrypt both in transit and at rest using AES-256 or equivalent, and apply strict key management per ISO/SAE 21434. Anonymize datasets with differential privacy or k-anonymity before training, push model inference to the edge to limit cloud exposure, and secure OTA updates with signed binaries; these steps reduce attack surface and meet regulatory expectations while preserving adaptive capabilities.
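Before a dataset leaves the vehicle or enters training, you can check how identifiable its rows are. The sketch below measures k-anonymity: the size of the smallest group of records that share the same quasi-identifier values. The field names in the example are hypothetical, and passing this check alone does not guarantee privacy.

```python
from collections import Counter

def k_anonymity(records, quasi_identifiers):
    """Return k, the size of the smallest group sharing all quasi-identifier values.

    records: list of dicts; quasi_identifiers: keys that could help re-identify a driver.
    Returns 0 for an empty dataset.
    """
    if not records:
        return 0
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())

trips = [
    {"age_band": "30-39", "region": "north", "glance_s": 1.2},
    {"age_band": "30-39", "region": "north", "glance_s": 0.8},
    {"age_band": "60-69", "region": "south", "glance_s": 1.9},
]
k = k_anonymity(trips, ["age_band", "region"])  # 1: the third driver is unique
```

A result of 1 means at least one driver is uniquely identifiable from those fields, so you would coarsen the bands or drop the row before training.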

User Trust and Transparency

You build trust by surfacing concise, actionable explanations: use SHAP or LIME to explain why the interface suggested a lane change or dimmed alerts, provide a clear opt-in with granular toggles, and maintain an immutable audit log for decisions that affect safety. Transparency reduces perceived surveillance and improves adoption of adaptive features.
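One common way to approximate an immutable audit log in software is a hash chain, where each entry stores the hash of the one before it. The sketch below uses only the standard library; the entry layout is an assumption, and the result is tamper-evident rather than truly immutable, so you would still anchor or sign the latest hash somewhere the vehicle cannot rewrite.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry

def _digest(event, prev_hash):
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(log, event):
    """Append a decision record that is chained to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)})

def verify(log):
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True
```

Each `event` can carry the model version and the explanation shown to the driver, which ties the log to the transparency features described above.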

You should run explainability testing with representative user cohorts, report model performance broken down by demographics to detect bias, and publish model cards stating training-data provenance, update cadence, and known failure modes. Implement a visible fallback state and a one-touch override; track metrics like override frequency, time-to-intervention and post-override safety outcomes to measure whether transparency actually shifts behavior and lowers risk.
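Breaking performance down by cohort needs little more than grouped counting. In this sketch each row is a hypothetical `(group, y_true, y_pred)` tuple, and accuracy stands in for whichever metric you actually report.

```python
from collections import defaultdict

def accuracy_by_group(rows):
    """Return {group: accuracy} for rows of (group, y_true, y_pred)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in rows:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}

def largest_gap(per_group):
    """Difference between the best- and worst-served groups."""
    values = list(per_group.values())
    return max(values) - min(values)
```

A large gap between groups is a signal to collect more data for the weaker cohort or to hold back the adaptation for it, and it is the kind of figure a model card should state.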

Summing up

In short, expect the fusion of machine learning and UX to produce vehicle interfaces that adapt to your habits, context, and preferences, enhancing safety and convenience. Achieving truly adaptive systems will require rigorous data governance, transparent algorithms, continuous human-centered design, and regulatory alignment so that you maintain control and trust in the vehicle’s decisions.